AI Ethics in government: understanding its past, present and reimagining its future

Article highlights

.@theasnow joins forces w/ @3AInstitute @LorennRuster to explore the nature of #AI ethics instruments in government

How might the nature of AI ethics instruments in gov be reimagined? @theasnow and @LorennRuster want your ideas & feedback!

"Our hypothesis is that underlying value set for gov AI ethics instruments is focused on prevention, risk minimisation, reducing downside" ...but is that all?

Partnering for Learning

We put our vision for government into practice through learning partner projects that align with our values and help reimagine government so that it works for everyone.

Over the past 5 years, we have seen a range of Artificial Intelligence (AI) ethics instruments — frameworks, principles, guidelines — developed by governments and organisations to steward how AI should be built and used. Although these instruments are generally not enforceable, they can play an important role in shaping the types of conversations governments have and the types of regulatory and policy decisions they may make about the future of AI-enabled products and services.

Underlying any AI ethics instrument is a value set — a value set that we believe needs some further exploration. Our hypothesis is that the underlying value set for government AI Ethics instruments today is focused on things like prevention, on risk minimisation, on reducing downside. Undoubtedly, these things are important. But is that all? Is risk minimisation the ultimate value we are striving to achieve through AI Ethics instruments? Should it be?

We believe that the role of government is not just about prevention or protection, but also ‘enablement’, or creating conditions for communities to thrive; both are important. Our hypothesis is that AI Ethics instruments have so far focused on the first half of this equation, and that taking a dignity-centred approach to AI Ethics could help move them towards enablement.

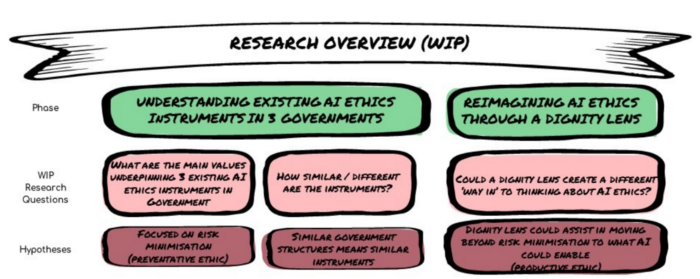

To explore this, we have devised a research project in two phases:

Phase 1: Understanding existing AI Ethics instruments

The Ethics Centre talks about ethics as “the process of questioning, discovering and defending our values, principles and purpose”. It defines values as “things we strive for, desire and seek to protect”, principles as “how we may or may not achieve our values” and purpose as giving life to values and principles. When it comes to AI Ethics in government, generally (and at least initially) a statement of principles emerges (see for example the Australian Government AI Ethics Principles, Canadian Government AI Guiding Principles etc). AI Ethics principles offer clues as to what things have been decided as important to governments.

Unpacking what’s in AI Ethics instruments and how they came to be will form the first phase of our research. We will start with discourse analysis of current AI Ethics documents from the Australian, UK and Canadian governments to understand what they currently encapsulate. We will then use semi-structured interviews to further understand how these instruments came to be and how they are currently used.

We go into this first phase with an open mind, fuelled by a hunch that we could begin to reimagine AI Ethics instruments by putting dignity at the centre and, in doing so, enable conditions for thriving communities. We’ll see whether this hypothesis still resonates at the end of Phase 1.

Phase 2: Reimagining AI Ethics in government

Several waves of AI Ethics have emerged over the past 5 years:

First wave: focused on principles and led by philosophers

Second wave: focused on technical fixes and led by computer scientists

Third wave: focused on practical fixes and fuelled by notions of justice

We are interested in pushing the thinking around what comes next. What might a fourth wave look like, and could the notion of dignity have a role to play?

There are many ways to describe dignity. After looking at a range of dignity frameworks across philosophy, law, nursing clinical care, theology and crisis negotiation, the one that resonates most with us at this stage is Donna Hicks’ framework of the essential elements of human dignity and dignity violations. See Figure 2 for an outline of the framework. We would seek to apply this framework to AI Ethics in government and understand whether it gets us closer to enablement. As with the rest of this research, this is work in progress and we are open to your suggestions and feedback.

Figure 2: Donna Hicks © Dignity Framework

Overall, we intend to:

Understand the values underpinning AI ethics instruments currently used in the federal governments (or equivalent) of Australia, Canada and the UK. We hypothesise they are based on risk minimisation and we will explore this hypothesis through discourse analysis and semi-structured interviews.

Reimagine what government AI ethics instruments could look like. We hypothesise that a dignity lens could allow for ‘enablement’ in addition to the anticipated risk focus.

An overview of our research can be found in Figure 3.

Figure 3: A WIP overview of our research. Graphical template from Slidesgo, including icons by Flaticon and infographics & images by Freepik.

Our ask

This is the very beginning of our research and we firmly believe in the power of collective intelligence to help us ask better questions and interrogate our own biases with greater rigour. With this in mind, we invite:

Feedback on what you’ve read above — does this resonate? What do you find interesting? What is unclear? What’s missing so far?

Ideas — does this spark a new idea for you that we could potentially incorporate or explore together? Or trigger an idea you have already explored that may be relevant?

Potential contacts — are you someone we should be speaking with on this? Is there someone in your network that we should be contacting?

In January and February 2021 we’ll be consolidating our thoughts and speaking with selected stakeholders, with a view to publishing an initial foundational piece in the first quarter of 2021. We will also continue to post our progress as we go.

To reach out, post in the comments below and/or contact us directly.

This article is also published on Medium here.

AI Ethics in Government

Together with the 3A Institute at the Australian National University, we will explore the nature of AI ethics instruments in government and how they might be reimagined. We invite you to share feedback, ideas and potential contacts as we continue with this research.
