Open data in San Francisco, California

The initiative

In October 2009, San Francisco’s then mayor, Gavin Newsom, issued Executive Directive 09-06, Open Data. It stated that “this Directive will enhance open government, transparency, and accountability by improving access to City data that adheres to privacy and security policies”. [1] It required City departments to make all non-confidential datasets under their authority available on DataSF.org, the City’s website for government data. DataSF.org made more than 100 local government datasets publicly available, including police, transport authority and public works data. The initiative was designed to increase government transparency and efficiency and to open up new economic opportunities. It was codified in the City’s administrative code in 2010.

The challenge

In San Francisco, as in other cities worldwide, there have been calls to make local government data available to the public in an accessible format. Beyond improving transparency, open data enables software developers to create apps that help citizens and visitors make the most of the city’s resources.

The public impact

Between the launch of open data in 2009 and 2014, over 200 datasets were published and 60 apps were created using them. For example, the city's Department of Environment released recycling data that a third party used to develop EcoFinder, an iPhone app that helps residents find recycling facilities.

San Francisco's Chief Innovation Officer announced in June 2012 that access to real-time transit data had led to a 21.7% fall in calls to SF311, the line that handles non-emergency calls about City and County of San Francisco government matters. This decrease in call volume was estimated to have saved the city over US$1 million. As of 12 May 2016, 374 datasets had been published. [2]

Stakeholder engagement

Both internal and external stakeholders collaborated in the open data initiative. The main internal stakeholders were the mayor, the city administration (especially the Department of Technology and the Department of Public Health) and the city’s inhabitants. Among the external stakeholders, IT company Socrata helped the city develop a data platform, data visualisation firm Stamen created the San Francisco CrimeSpotting app, and Twitter helped reduce the load on SF311. The NGO Code for America assisted with drafting the open data standards.

Political commitment

Although the open data programme received significant support from the mayor, it was not part of the city administration’s formal policy, nor is there any evidence of support for it across the political spectrum.

Public confidence

Gavin Newsom was first elected mayor in 2003 and re-elected in 2007 with 73.66% of the vote, which suggests that citizens had confidence in him. However, there is no clear evidence, such as opinion polls or public perception surveys, of public confidence in the programme itself.

Clarity of objectives

Open Data San Francisco has a clear mission statement: “to enable use of the City's data to support a broad range of outcomes from increasing government transparency and efficiency to unlocking new realms of economic value.”

Additionally, it has set out a vision statement: “The City's data is understood, documented, and of high quality. The data is published so that it is usable, timely, and accessible, which supports broad and unanticipated uses of our data.” [3]

Strength of evidence

The idea of the project was based on similar open data programmes in other American cities and in the UK: “in addition to our engagement strategy, we reviewed not only the literature but existing open data plans and practices from NYC, Chicago, Philadelphia, Great Britain and many more.” [4]

Feasibility

During the planning stage, the city administration examined the cost of launching and running the programme and concluded that cost would not be a major bottleneck, as most of the data already existed. As for other impediments to progress, Brian Purchia, the mayor’s deputy communications director, “pointed out that the most important barrier was not really expense. Most of the data exists already, he says – just not in a format that developers can use. ‘The cost is there, but most of it is just man hours.’” [5]

Management

The programme was managed by the chief data officer, Joy Bonaguro, and the open data programme manager, Jason Lally. Both had relevant experience of designing and managing systems delivery in an open data environment, which suggests that the programme had skilled managers who understood the delivery context. In addition, data coordinators served as a key point of accountability for timelines and questions about datasets, and provided quarterly reports on progress in implementing the open data plan.

Measurement

The number of datasets published was used as a metric to gauge the impact of the open data programme, because the city government lacked other ways of measuring the outcomes and impact of its open data initiative.

In a 2014 report issued by the mayor and the chief data officer, it was admitted that “‘number of datasets published’ is a blunt metric. While we need to initially increase the number of datasets, we also need to explore measuring publication of high value data, increasing the frequency of updates, responding to data requests or automation of publication. We will also normalise and define what constitutes a dataset ... Over time, we also need to identify ways of measuring the outcomes and impacts of our open data initiative. In the meantime, the process metrics will provide the basis for an outcomes based evaluation for open data.” [6]

Alignment

There was good internal coordination between the different city departments, with the mayor leading the initiative. There was also collaboration with the private sector, principally medium-sized IT firms and small app developers as well as social networking firms.

The programme was carefully positioned to facilitate civil society participation. In 2012, Newsom’s successor, Mayor Ed Lee, unveiled data.SFgov.org, a cloud-based open data site and the successor to DataSF.org, developed by Socrata, the Seattle-based start-up responsible for the initial platform. The published data was used to create apps for local residents. For example, the app developer SpatialKey created a visualisation tool that lets residents check for drug offences taking place near schools, while Elbatrop developed the tree identification app SFTrees.

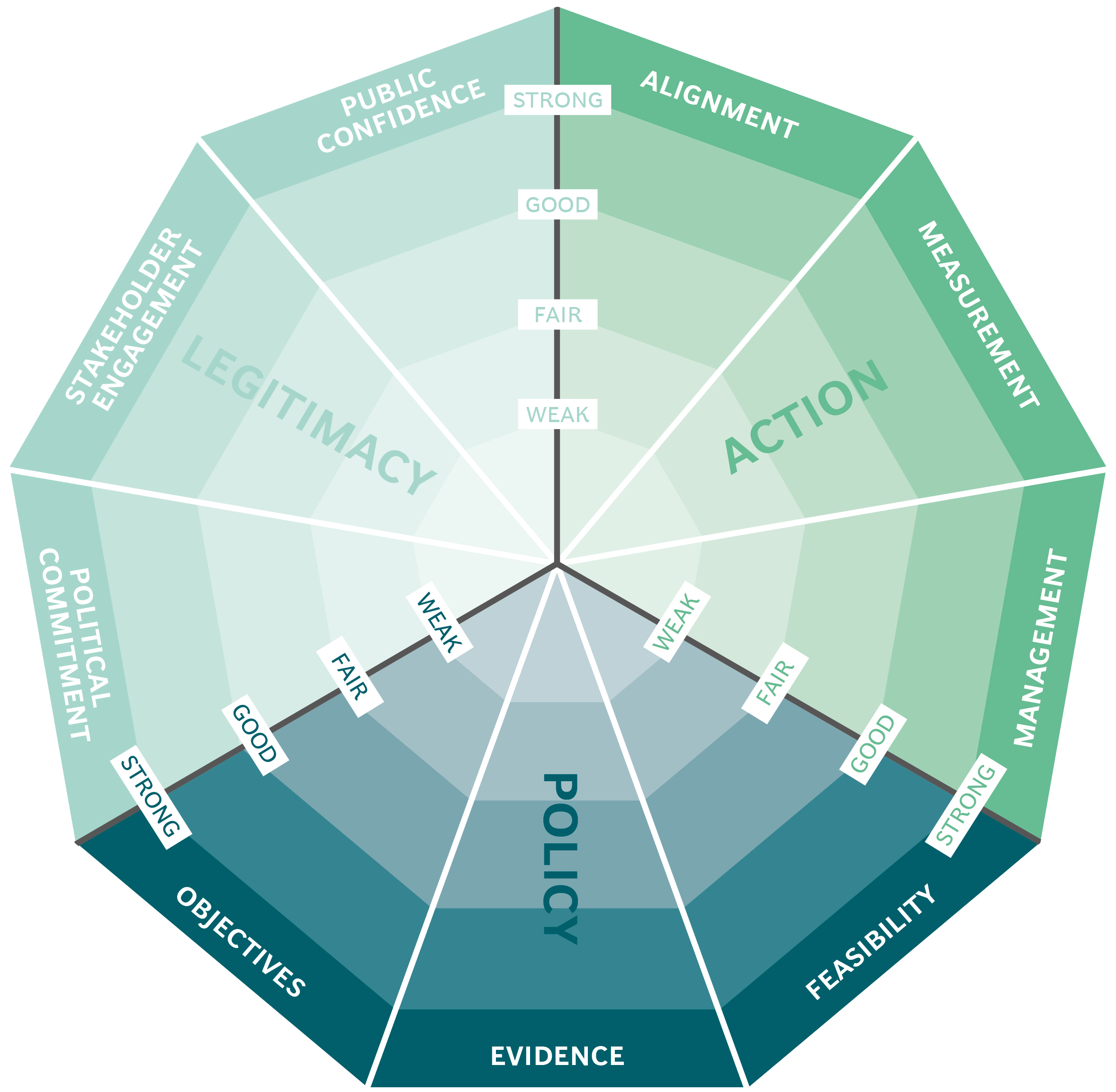

The Public Impact Fundamentals - A framework for successful policy

This case study has been assessed using the Public Impact Fundamentals, a simple framework and practical tool to help you assess your public policies and ensure that the three fundamentals (Legitimacy, Policy and Action) are embedded in them.
