AI Ethics in government: understanding its past, present and reimagining its future

In our last blog, we spoke about our interest in pushing the thinking on the future of government AI Ethics instruments. We reflected on different waves of AI Ethics to date and we hypothesised that applying a dignity lens to current government AI Ethics Instruments in Australia, Canada and the United Kingdom might surface some interesting questions and potential pathways to reimagine what AI Ethics instruments could look like.

As expected, our thinking has changed and matured as we’ve researched and reflected, and we believe it will continue to do so. Ultimately, we still believe applying a dignity lens to government AI Ethics instruments is a worthy endeavour. However, since our first blog, we’ve thought more deeply about our rationale for focusing on dignity at all and have attempted to become clearer about what we mean when we say ‘applying a dignity lens’. This blog post focuses on these two aspects of our work.

Drawing on definitions from Hicks, the Universal Declaration of Human Rights and The Ethics Centre, we have landed on the following working definition of dignity to guide us forward:

Dignity refers to the inherent value and inherent vulnerability of individuals. This worth is not connected to usefulness; it is equal amongst all humans from birth regardless of identity, ethnicity, religion, ability or any other factor. Dignity is a desire to be seen, heard, listened to and treated fairly; to be recognised, understood and to feel safe in the world.

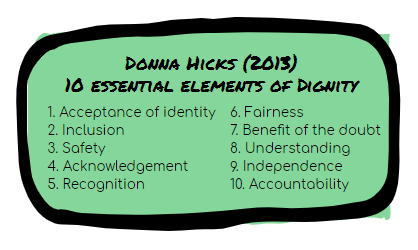

We have adopted the 10 essential elements of dignity from Hicks’ (2013) Dignity Model as a way of understanding what it takes to implement dignity in practice (see Figure 1).

Figure 1: Donna Hicks (2013) 10 essential elements of dignity

Dignity is a core value underpinning democracy:

“An authentic democracy is not merely the result of a formal observation of a set of rules but is the fruit of a convinced acceptance of the values that inspire democratic procedures: the dignity of every human person, the respect of human rights, commitment to the common good as the purpose and guiding criterion for political life. If there is no general consensus on these values, the deepest meaning of democracy is lost and its stability is compromised” Pontifical Council for Justice and Peace (2005).

In addition, an analysis of multilateral agreements to which Australia, the UK, Canada and many others are party, such as the Universal Declaration of Human Rights, the OECD Principles on Artificial Intelligence and the G20 AI principles for “responsible stewardship of Trustworthy AI”, shows that these agreements feature dignity.

Given its importance to democracy and to human rights obligations, and its presence in existing multilateral AI commitments, one might expect dignity to feature heavily in government AI Ethics frameworks. However, our research found that among the core AI Ethics instruments of Australia, Canada and the UK, dignity is overtly referenced only once: in the UK instrument, in descriptor text under the principle of Fairness.

We think this is a shortcoming. And we’re curious to understand why (at least at face value) dignity is seemingly overlooked.

As AI becomes more pervasive both within government itself and in the sphere of government regulation, the shaping and influencing impacts of AI Ethics instruments become ever more important. For this reason, we think a value such as dignity — which is fundamental to our democratic rights as citizens — needs to be reflected in government AI Ethics instruments.

To explore this further, we have developed a dignity lens and applied it to deepen our understanding of how government AI Ethics instruments address dignity.

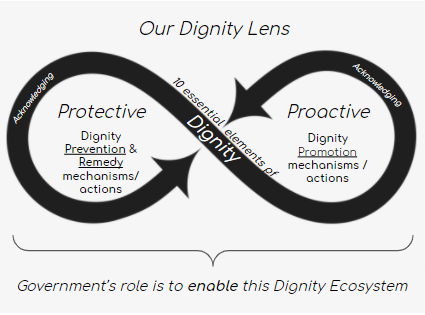

Our Dignity Lens has a fundamental belief at its core: we believe a goal of government should be to enable a Dignity Ecosystem and AI Ethics instruments should be one avenue by which this goal is pursued.

By applying a discourse analysis approach to Hicks’ book on dignity, we have identified two ways in which government can enable a Dignity Ecosystem:

Through Protective roles — this includes mechanisms and actions associated with preventing dignity violations and remedying dignity violations.

Through Proactive roles — this includes mechanisms and actions associated with promoting dignity.

Both Protective and Proactive roles are underpinned by acknowledging dignity.

We believe governments need to play both Protective and Proactive roles in order to uphold dignity. See Figure 2.

Figure 2: Our Dignity Lens

We are in the process of using Our Dignity Lens to investigate government AI Ethics Instruments. To do this, we are considering the following questions:

To what extent are Hicks’ 10 essential elements of dignity reflected in government AI Ethics instruments?; and

What types of roles are being played by governments in relation to these dignity elements?

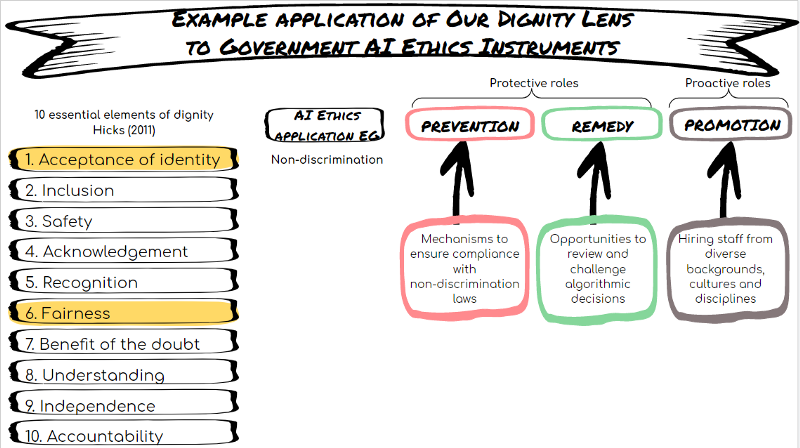

For example (see Figure 3), Hicks identifies ‘Acceptance of Identity’ and ‘Fairness’ as elements of dignity. In government AI Ethics instruments, these dignity elements could be pursued through mechanisms and actions associated with non-discrimination. In this case, governments play:

Protective roles through:

Preventing Dignity Violations: mechanisms to ensure compliance with non-discrimination laws; and/or

Remedying Dignity Violations: mechanisms to enable review and challenge of algorithmic decisions

Proactive roles through:

Promoting Dignity: actions such as hiring staff from diverse backgrounds, cultures and disciplines

Figure 3: Our Dignity Lens in action as an analytic tool

There are different approaches taken by different governments, with different elements of dignity covered and different roles played by governments. We are in the process of completing our initial analysis and making some sense of what we’re discovering. We hope to share our findings in a thought piece on this work in the coming weeks.

We firmly believe in the power of collective intelligence to help us ask better questions and interrogate our own biases with greater rigour. With this in mind, we invite:

Feedback on what you’ve read above — does Our Dignity Lens resonate? What do you find interesting? What is unclear?

Ideas — does this spark a new idea for you that we could potentially incorporate or explore together? Or trigger an idea you have already explored that may be relevant?

Potential contacts — are you someone we should be speaking with on this? Is there someone in your network that we should be contacting?

To reach out, post in the comments below and/or contact us directly.

Together with the 3A Institute at the Australian National University, we will explore the nature of AI ethics instruments in government and how they might be reimagined. We invite you to share feedback, ideas and potential contacts as we continue with this research.